About the Iridis research computing facility

Iridis, the University’s high-performance computing system, has dramatically increased the University’s computational performance and reach. Now in its fifth generation, Iridis remains one of the largest computational facilities in the UK. In 2017, Iridis 5 entered the TOP500 list of the world’s most powerful supercomputers; it is over four times more powerful than its predecessor. In 2024, we will welcome the sixth generation, Iridis 6, which adds over 26,000 CPU cores. This upgrade effectively doubles the current computational capacity of the entire Iridis facility, marking a significant advance in our computing capabilities and fostering innovation. Iridis 6 replaces Iridis 4, which had just over 12,000 CPU cores, and runs alongside Iridis 5 to form a versatile computing ecosystem.

Highlights

- Iridis 5 comprises 25,000+ processor cores, 74 GPU cards and 2.2 PB of storage using the IBM Spectrum Scale file system.

- Iridis 6 will comprise 26,000+ AMD CPU cores, alongside high-memory and login nodes with up to 3 TB of memory and 15 TB of local storage each.

- Dedicated nodes for visualisation software applications.

- Management of private research facilities, including the School of Engineering’s deep learning computing cluster.

- Dedicated research computing systems engineers who provide user support and training, making Iridis an inclusive HPC facility that supports both research and teaching at the University.

- High performance InfiniBand network infrastructure for high-speed data transfer.

The images on this page were produced using the Iridis 5 high-performance computing (HPC) facility.

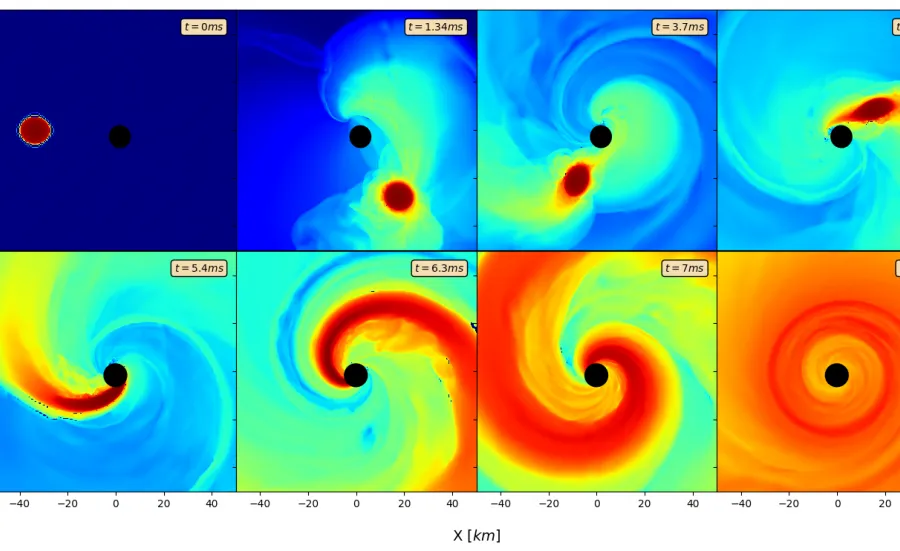

Black Hole - Neutron Star Mergers in General Relativity

This snapshot shows the evolution of the maximum rest-mass density on the x-y plane over a 10-millisecond period. A 1.4 M☉ irrotational neutron star is being tidally disrupted by a 7 M☉ black hole with a dimensionless spin parameter of 0.7. The simulation took 100 hours to complete on 360 cores of Iridis 5.

Research Group: Mathematical Sciences / Applied Mathematics and Theoretical Physics

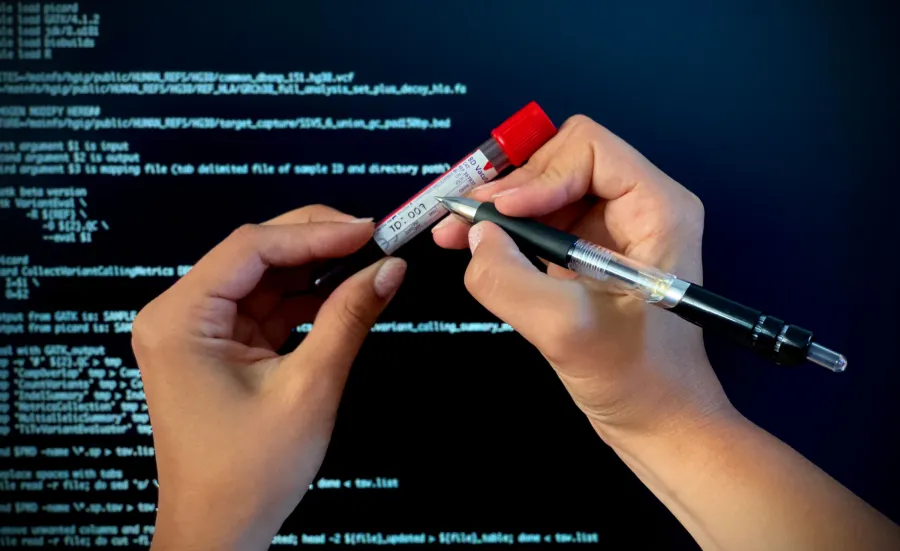

Big Data Analysis for Disease Understanding and Prevention

We generate massive datasets detailing genomic changes in human DNA. Massively parallel analyses of these data using statistics and machine learning help us better understand which genetic changes can cause, or predispose people to, disease. Our research aims to better diagnose, predict and prevent ill health.

Research Group/Department: Human Genetics and Genomic Medicine

Daniela Mihai

Sketch of a city landscape based on a photograph of Vancouver's skyline. The image is a rasterised line sketch produced by our method for differentiable sketch generation, implemented entirely as GPU-accelerated tensor operations in PyTorch. Rasterisations of primitives such as lines, points and curves can be composed in different ways (as long as the composition remains differentiable) to produce an output that can be optimised.

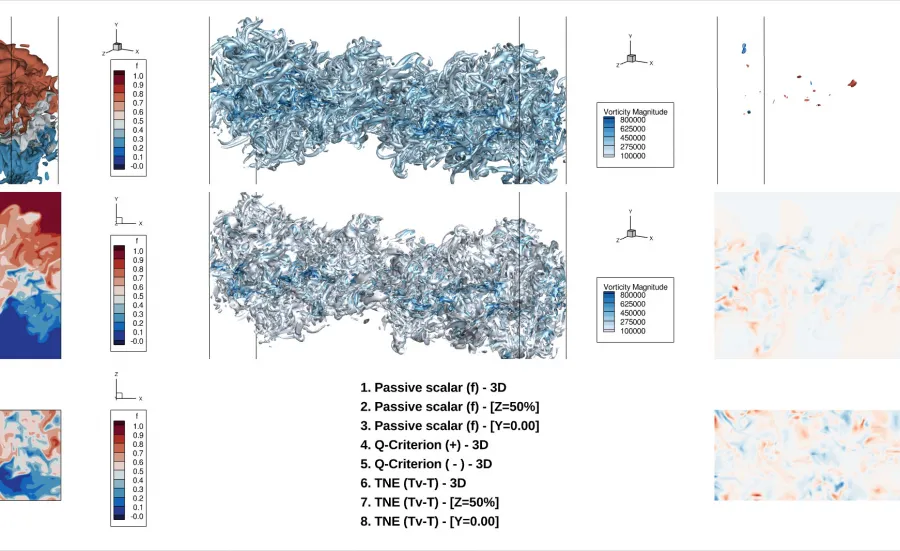

High Enthalpy Effects in Shear Layer Breakdown to Turbulence

The image is a rendering of a direct numerical simulation (DNS) of a mixing layer in which two flows move in opposite directions at very high velocities. The simulation shows vortices developing as they roll up in the shear layer, demonstrating the non-equilibrium phenomena that occur in hypersonic flows and their influence on turbulence.

Research Group/Department: Aeronautical and Astronautical Engineering

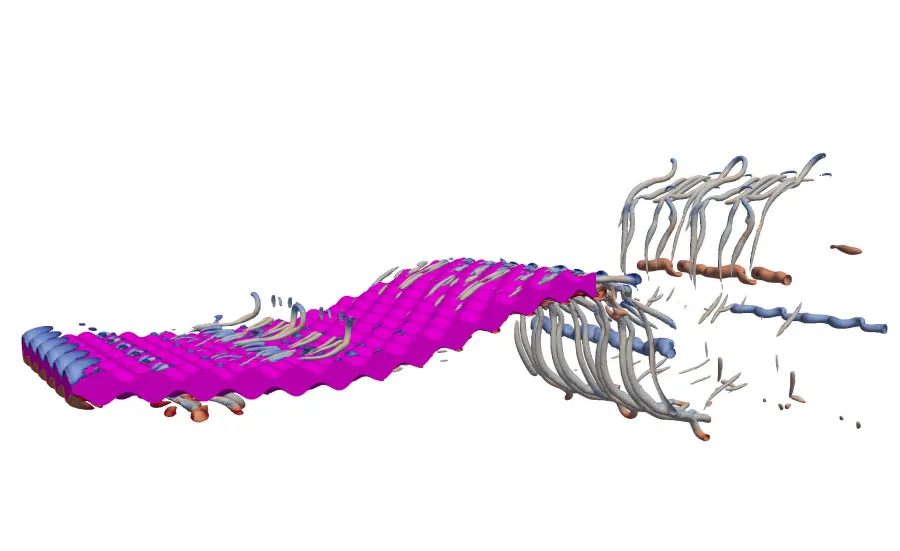

The Impact of Surface Texture on the Hydrodynamics of Aquatic Locomotion

A flat plate with egg-carton roughness swimming with realistic fish kinematics.

Research Group/Department: Engineering

Citation: Massey, J., Ganapathisubramani, B. and Weymouth, G., 2022. A systematic investigation into the effect of roughness on self-propelled swimming plates. arXiv preprint arXiv:2211.11597.

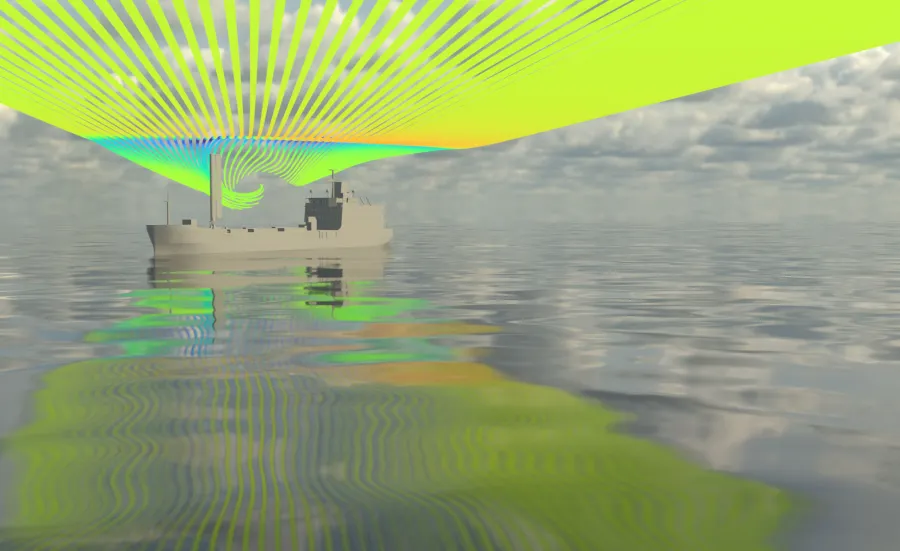

CFD Simulation to Determine the Aerodynamic Efficiency of a Solid Wing Sail on a Cargo Vessel

Research Group/Department: Wolfson Unit MTIA